Bun vs Node Performance

Drop-In Node to Bun Performance Evaluation

Related: Fooselo - Project

Overview

The following post captures performance measurements taken after migrating a Node.js project to Bun as a drop-in replacement. The goal is to evaluate real-world performance differences across common development tasks.

Goals

Measure and compare performance of:

- Install packages (cold & warm cache)

- Run unit tests

- Build client application

- Generate API client

Metrics Captured

- Median runtime (seconds) and standard deviation (via repeated runs)

- Wall-clock runtime per run

- Peak memory (maximum resident set size, in MB)

- Cache state (cold = cleared caches, warm = after first run)

- Environment metadata (OS, CPU, RAM, Node version, pnpm version, Bun version, date/time)

Environment

Baseline (Node.js + pnpm)

- Date: 2026-01-08

- OS: Fedora 43 (Linux kernel 6.17.12-300.fc43.x86_64)

- CPU: 11th Gen Intel(R) Core(TM) i7-11800H @ 2.30GHz (8 cores, 16 threads)

- RAM: 31GB

- Node.js: v25.0.0

- pnpm: 10.20.0

- Disk: NVMe SSD

After Migration (Bun)

- Date: 2026-01-08

- Bun: 1.3.5

- (Other environment same as baseline)
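The environment fields above can be collected automatically. The following is a hedged, Linux-only sketch and is not part of the original project: the helper names `tool_version` and `print_env` are hypothetical. It reads `/etc/os-release`, `/proc/cpuinfo`, and `/proc/meminfo`, and prints "not installed" for a missing tool instead of failing.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not from the project): prints the environment
# metadata recorded for each benchmark run. Linux-only.
set -u

# Print "<tool>: <first line of --version>", or "<tool>: not installed".
tool_version() {
  if command -v "$1" >/dev/null 2>&1; then
    printf '%s: %s\n' "$1" "$("$1" --version 2>/dev/null | head -n 1)"
  else
    printf '%s: not installed\n' "$1"
  fi
}

print_env() {
  printf 'Date: %s\n' "$(date '+%Y-%m-%d %H:%M')"
  # Source os-release in a subshell so its variables do not leak into this script.
  printf 'OS: %s\n' "$( . /etc/os-release 2>/dev/null && echo "${PRETTY_NAME:-unknown}" || echo unknown)"
  printf 'Kernel: %s\n' "$(uname -r)"
  # First "model name" entry from /proc/cpuinfo, plus the number of logical CPUs.
  printf 'CPU: %s (%s logical CPUs)\n' \
    "$(awk -F': ' '/^model name/ { print $2; exit }' /proc/cpuinfo)" "$(nproc)"
  # MemTotal is reported in kB; convert to whole GB, rounding down.
  printf 'RAM: %sGB\n' "$(awk '/^MemTotal/ { printf "%d", $2 / 1024 / 1024 }' /proc/meminfo)"
  tool_version node
  tool_version pnpm
  tool_version bun
}

print_env
```

Because `MemTotal` excludes memory reserved by the kernel and firmware, a 32 GB machine typically reports slightly less than 32 GB, which is consistent with the "31GB" figure above.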

Measurement Methodology

Tools Used

- hyperfine: for stable, repeated timings with statistical analysis

  - Install: sudo apt install hyperfine or cargo install hyperfine

- GNU time: for memory usage measurements

  - Command: /usr/bin/time -v

Process

- Run each command multiple times (warmup: 3, runs: 10)

- Capture median, mean, and standard deviation (hyperfine)

- Capture peak memory for at least one representative run (/usr/bin/time -v)

- Test both cold (cleared caches/node_modules) and warm (cached) scenarios

- Record exact command lines used
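The post does not include scripts/bench/measure.sh itself. The following is only a hypothetical sketch of what such a script could look like, assuming it follows the process above: hyperfine with 3 warmup runs and 10 measured runs, then one GNU time run for peak memory. The `bench-results/` output directory, the helper names, and the KB-to-MB conversion (dividing by 1024) are assumptions and do not come from the project.

```shell
#!/usr/bin/env bash
# Hypothetical sketch of scripts/bench/measure.sh (the real script is not shown).
# Usage: measure.sh <result-name> <command>
set -eu

# Convert the kbytes value reported by GNU time to MB (assumes 1 MB = 1024 KB).
kb_to_mb() {
  awk -v kb="$1" 'BEGIN { printf "%.2f\n", kb / 1024 }'
}

# Read `/usr/bin/time -v` output on stdin and print the peak RSS in kbytes.
peak_rss_kb() {
  awk -F': ' '/Maximum resident set size/ { print $2 }'
}

measure() {
  local name="$1" cmd="$2"
  local out_dir="bench-results"   # assumed output location
  mkdir -p "$out_dir"

  # Timing: 3 warmup runs, then 10 measured runs; statistics exported as JSON.
  hyperfine --warmup 3 --runs 10 --export-json "$out_dir/$name.json" "$cmd"

  # Memory: one representative run. GNU time writes its report to stderr,
  # so stderr is piped to the parser and the command's stdout is discarded.
  # `bash -c` is needed because the benchmarked commands may contain `&&`.
  local rss_kb
  rss_kb="$(/usr/bin/time -v bash -c "$cmd" 2>&1 >/dev/null | peak_rss_kb)"
  printf '%s peak RSS: %s MB\n' "$name" "$(kb_to_mb "$rss_kb")"
}

# Only measure when called with arguments, so the helpers can also be sourced.
if [ "$#" -ge 2 ]; then
  measure "$1" "$2"
fi
```

Keeping the timed runs (hyperfine) separate from the memory run (GNU time) means the extra `/usr/bin/time` wrapper does not affect the timing statistics.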

Commands Run

Each measurement is run through the automated script scripts/bench/measure.sh, which takes a result name and the command to benchmark:

# Baseline (pnpm)

./scripts/bench/measure.sh "pnpm-install-cold" "rm -rf node_modules && pnpm install"

./scripts/bench/measure.sh "pnpm-install-warm" "pnpm install"

./scripts/bench/measure.sh "pnpm-test-client" "pnpm nx test client --skip-nx-cache"

./scripts/bench/measure.sh "pnpm-build-client" "pnpm nx build client"

./scripts/bench/measure.sh "pnpm-codegen" "pnpm nx run generated-api-client:codegen"

# After Bun migration

./scripts/bench/measure.sh "bun-install-cold" "rm -rf node_modules && bun install"

./scripts/bench/measure.sh "bun-install-warm" "bun install"

./scripts/bench/measure.sh "bun-test-client" "bunx nx test client --skip-nx-cache"

./scripts/bench/measure.sh "bun-build-client" "bunx nx build client"

./scripts/bench/measure.sh "bun-codegen" "bunx nx run generated-api-client:codegen"

Results

Package Installation

| Variant | Cache State | Median (s) | Stddev (s) | Peak RSS (MB) | Notes |
|---|---|---|---|---|---|
| pnpm | cold | 4.023 | 0.103 | 679.40 | 1544 packages |
| pnpm | warm | 0.837 | 0.015 | 216.61 | Already up to date |
| bun | cold | 1.621 | 0.027 | 224.01 | 3085 packages installed |
| bun | warm | 0.178 | 0.006 | 205.94 | No changes |

Cold Install Speedup: 2.5x faster (60% time reduction)

Warm Install Speedup: 4.7x faster (79% time reduction)

Unit Tests (client)

| Runtime | Median (s) | Stddev (s) | Peak RSS (MB) | Notes |
|---|---|---|---|---|
| pnpm/node | 5.496 | 0.138 | 230.44 | 53 tests passed |
| bun | 4.460 | 0.191 | 225.08 | 53 tests passed |

Test Speedup: 1.2x faster (19% time reduction)

Build Client

| Runtime | Median (s) | Stddev (s) | Peak RSS (MB) | Notes |
|---|---|---|---|---|
| pnpm/node | 1.934 | 0.032 | 188.75 | With Nx cache |
| bun | 0.934 | 0.015 | 189.83 | With Nx cache |

Build Speedup: 2.1x faster (52% time reduction)

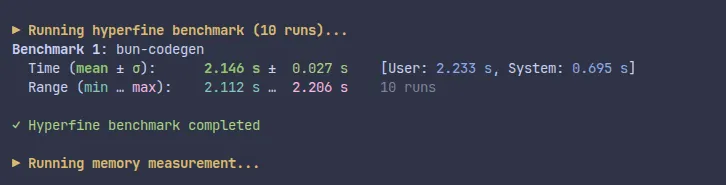

Generate API Client

| Runtime | Median (s) | Stddev (s) | Peak RSS (MB) | Notes |
|---|---|---|---|---|
| pnpm/node | 3.178 | 0.062 | 186.36 | Orval codegen |
| bun | 2.147 | 0.027 | 185.47 | Orval codegen |

Codegen Speedup: 1.5x faster (32% time reduction)

- …

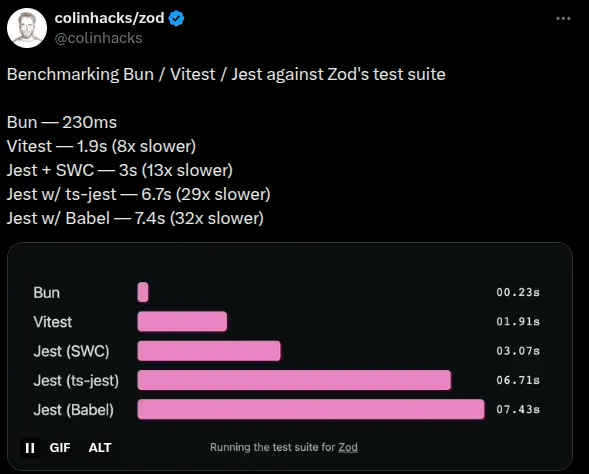
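The speedup and time-reduction figures follow from the median runtimes: speedup = baseline median / Bun median, and time reduction = 1 - Bun median / baseline median. The small sketch below recomputes them from the medians in the tables. The `speedup` function name and the rounding convention (one decimal for the speedup, whole percent for the reduction) are assumptions for illustration.

```shell
#!/usr/bin/env bash
# Speedup and time reduction from two median runtimes (seconds):
#   speedup   = baseline / candidate
#   reduction = (1 - candidate / baseline) * 100
set -eu

speedup() {
  awk -v base="$1" -v cand="$2" 'BEGIN {
    printf "%.1fx faster (%.0f%% time reduction)\n", base / cand, (1 - cand / base) * 100
  }'
}

speedup 4.023 1.621   # install, cold  → 2.5x faster (60% time reduction)
speedup 0.837 0.178   # install, warm  → 4.7x faster (79% time reduction)
speedup 5.496 4.460   # unit tests     → 1.2x faster (19% time reduction)
speedup 1.934 0.934   # build client   → 2.1x faster (52% time reduction)
speedup 3.178 2.147   # codegen        → 1.5x faster (32% time reduction)
```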

Follow-Up Steps

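The results suggest that swapping the runtime is not where the main potential for reducing unit test execution time lies: the test run improved by only about 1.2x, compared with 2.1x for the build. Bun ships its own test runner, bun test (with test APIs imported from the bun:test module), as an alternative to Vitest or Jest. The next step is to migrate the project's tests to bun:test and update this evaluation.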

The outlook is promising:

Sources: x/colinhacks